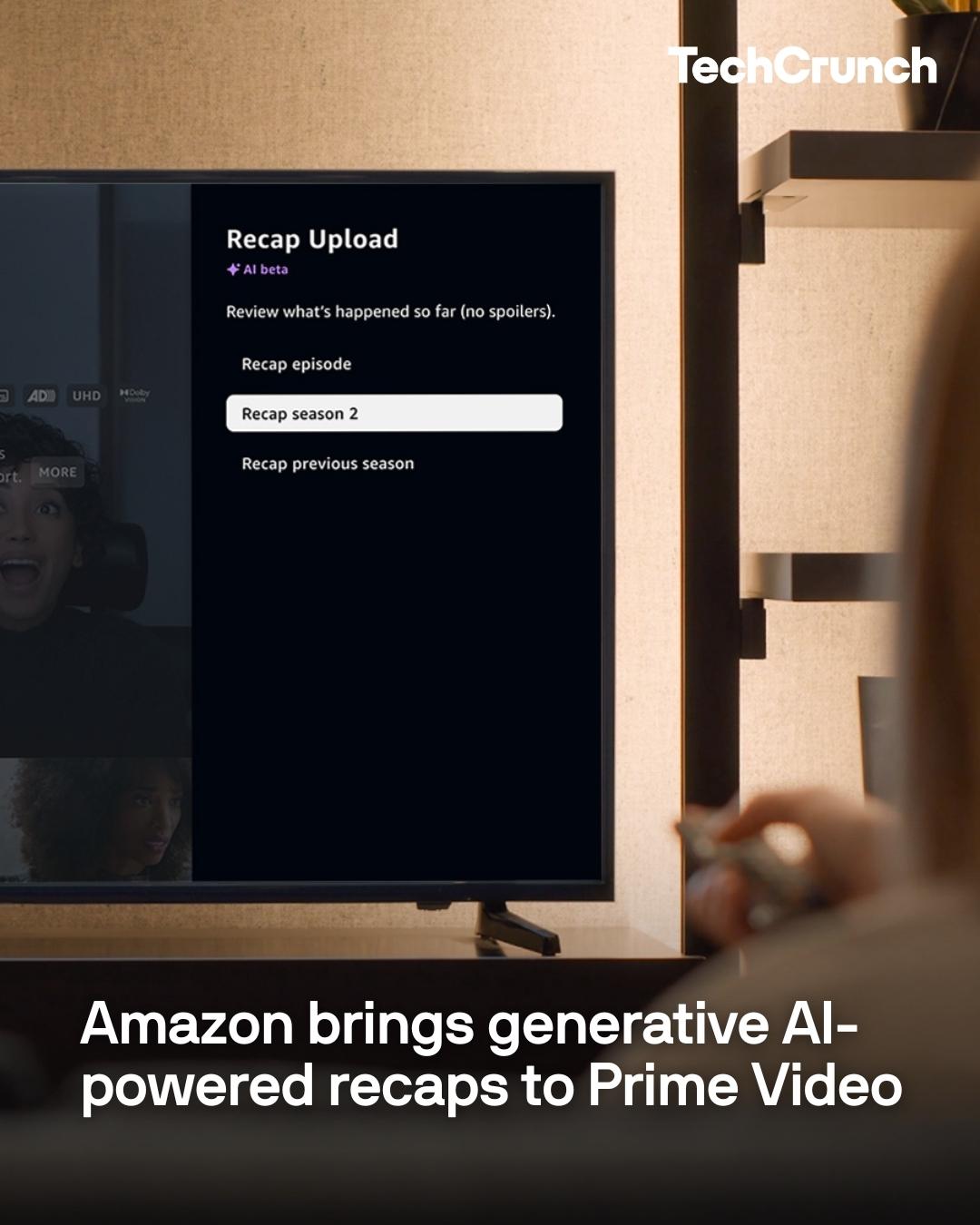

Prime Video’s latest feature aims to save viewers from encountering any spoilers.

Amazon announced Monday the launch of “X-Ray Recaps,” a generative AI-powered feature that creates concise summaries of entire seasons, single episodes, and even parts of episodes.

Notably, the company claims that guardrails were put in place to ensure the AI doesn’t generate spoilers, so you can fully enjoy your favorite series without the anxiety of stumbling upon unwanted information.

The new feature expands the streamer’s existing X-Ray feature, which displays information such as cast details and other trivia when you pause playback.

Read more about X-Ray Recaps at the link in the bio.

Article by Lauren Forristal

Image Credits: Amazon

#TechCrunch #technews #artificialintelligence #Amazon #generativeAI #AmazonPrime
